Hyper-V: Receive Side Scaling (RSS-Settings (VMQ))


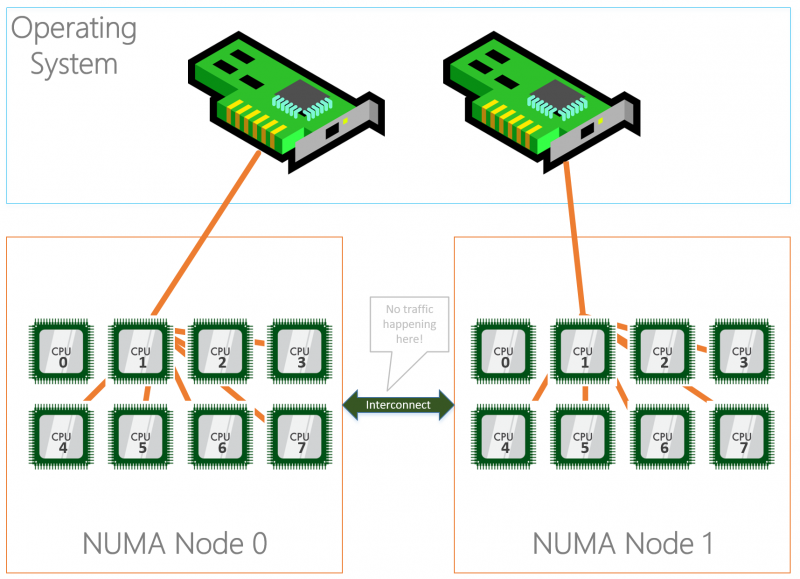


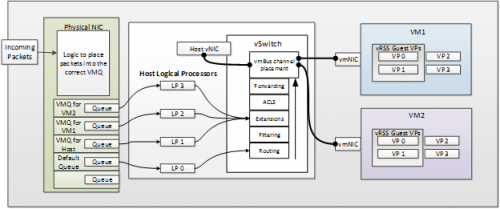


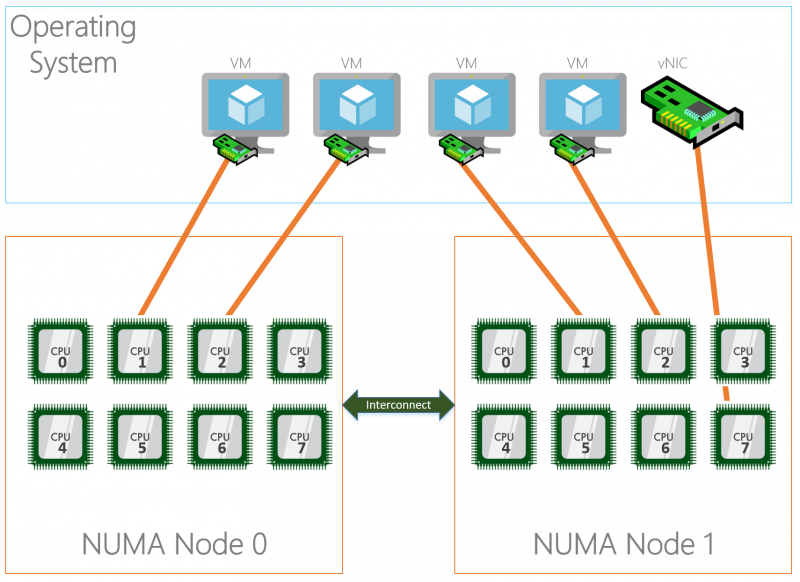


Revision as of 9 April 2018, 11:31

Receive side scaling (RSS) is a network driver technology that enables the efficient distribution of network receive processing across multiple CPUs in multiprocessor systems.

Note: Because hyper-threaded CPUs on the same physical core share the same execution engine, the effect is not the same as having multiple physical cores. For this reason, RSS does not use hyper-threaded processors.

In a Hyper-V environment, RSS is no longer applicable! The Hyper-V host in concert with the virtual machines must seek some other method to balance networking compute cost and provide maximal bandwidth to the virtualized NICs. This functionality is delivered by VMQ.

VMQ leverages the same hardware queues on the NIC as RSS, the ones that interrupt different cores. With VMQ, however, the filters and logic that distribute packets to the queues are different. Rather than one physical device using all the queues for its own network traffic, the queues are balanced among the host and the VMs on the system.


Explanation

By default, live migrations in Hyper-V 2016 are processed on core 0 on all NUMA nodes.

Because the parent partition (the management OS) also runs on core 0, load problems can occur while VMs are being live migrated.

That is, when one or more VMs are live migrated, core 0 comes under heavy load. If the core reaches 100% utilization, the parent partition (the management OS) can no longer do its work properly and the Hyper-V node crashes.

The RSS settings exist to prevent this. They assign the so-called queues (also called VM queues) to specific cores, which keeps live migrations from saturating core 0.

RSS

Receive Side Scaling (RSS) is a very important aspect of networking on Windows. RSS makes sure that incoming network traffic is spread among the available processors in the server for processing. Without RSS, network processing is bound to a single processor, which limits throughput to approximately 4 Gbps. Nowadays every NIC has RSS enabled by default, but the configuration is not optimized. Every NIC is configured with “Base Processor” 0, meaning it starts processing on processor 0 together with the other NICs; more importantly, processor 0 is also the default processor for Windows processes.

To configure RSS optimally, we want to start at processor 1 so that we don’t interfere with processes that land on processor 0 by default. We also want to respect the NUMA node assignment, so that RSS traffic does not use a processor in NUMA node 0 while the NIC is bound to NUMA node 1.
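The adjustment described above can be sketched with the built-in NetAdapter cmdlets. This is a minimal sketch under assumptions: `NIC01` is a placeholder adapter name, the NIC is assumed to sit on NUMA node 0, and the base processor value 2 assumes hyper-threading is enabled (logical processors 0 and 1 then share the first physical core; without hyper-threading the equivalent value would be 1).

```powershell
# Sketch only: 'NIC01' and the processor/NUMA values are placeholders, adjust to your hardware.

# Show the current RSS settings, including the base processor and NUMA node.
Get-NetAdapterRss -Name 'NIC01'

# Move RSS processing off processor 0 and keep it on the NIC's own NUMA node.
Set-NetAdapterRss -Name 'NIC01' -BaseProcessorNumber 2 -NumaNode 0
```

Running `Get-NetAdapterRss` again afterwards is a simple way to confirm that the new base processor was accepted by the driver.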

VMQ

With the arrival of virtualization on servers, RSS hit some limitations because it wasn’t built for this “new world”. Long story short: when physical adapters are bound to a virtual switch on the system, RSS is disabled on them. This caused scalability issues that needed to be solved… hello VMQ.

The real benefit of VMQ is realized when it comes time for the vSwitch to route the packets. When incoming packets are indicated from a queue on the NIC that has a VMQ associated with it, the vSwitch is able to use the direct hardware link to forward the packet to the right VM very quickly, bypassing the switch’s routing code. This reduces the CPU cost of the routing functionality and causes a measurable decrease in latency.

Unlike RSS, where incoming traffic is spread among processors, VMQ creates a queue for each MAC address on the system and links that queue to a processor core. This means that a MAC address, which corresponds to the NIC of a VM or to a host vNIC, never has more processing power than one processor core. This limits network throughput to about 4 Gbps, in the same way as when RSS is not enabled.
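To see the queue-per-MAC-address mapping on a running host, the VMQ state can be inspected with the NetAdapter cmdlets. A minimal sketch, assuming a placeholder adapter name `NIC01` that is bound to a virtual switch:

```powershell
# Sketch only: 'NIC01' is a placeholder adapter name.

# Per-adapter summary: whether VMQ is enabled, the base processor and the number of receive queues.
Get-NetAdapterVmq -Name 'NIC01'

# One row per allocated queue, showing the MAC address filter and the processor the queue is linked to.
Get-NetAdapterVmqQueue -Name 'NIC01'
```

Each row of the second command corresponds to one VM NIC or host vNIC, which makes it easy to spot several busy queues sharing the same processor.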

Applying the VMQ settings

    # Enable VMQ
    Set-NetAdapterVmq -Name <NIC-Name> -Enabled $true
    # Enable RSS
    Set-NetAdapterRss -Name <NIC-Name> -Enabled $true
    # Apply the VMQ settings
    Set-NetAdapterVmq -Name <NIC-Name> -BaseProcessorNumber <first core, not core 0> -MaxProcessors <number of cores> -MaxProcessorNumber <last core> -NumaNode <NUMA-Node>

The number of queues can also be set with the parameter -NumberOfReceiveQueues. The recommendation here is to leave this setting on Auto.

If enough cores per CPU are available, 8 queues are recommended for a 1 Gbit NIC and 16 queues for a NIC of 10 Gbit or faster.

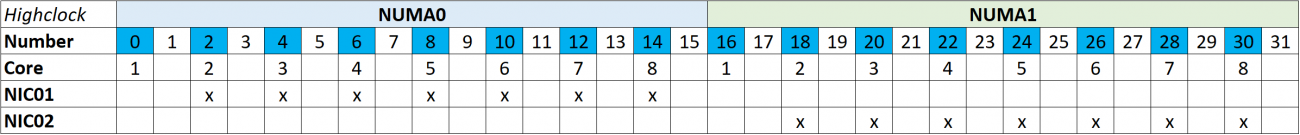

VMQ settings example (optimal, with 32 logical cores in total)

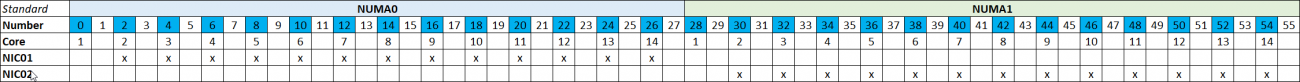

We have a server with the following hardware:

- 2 CPUs (i.e. 2 sockets) with 8 cores each (+ hyper-threading enabled = 16 logical cores per NUMA node)

- 2 NICs (in a team/bond)

The optimal distribution of the queues would be as follows:

Blue marks the physical cores.

Hyper-threaded cores must not be used!

We assign the first NIC to the first NUMA node (NUMA node 0), but we leave out core 0, i.e. the first core in NUMA node 0.

We assign the second NIC to the second NUMA node (NUMA node 1), but we leave out core 0 of that node, i.e. the first core in NUMA node 1.

Applying the VMQ settings

    Set-NetAdapterVmq -Name NIC01 -Enabled $true
    Set-NetAdapterVmq -Name NIC02 -Enabled $true
    Set-NetAdapterVmq -Name NIC01 -BaseProcessorNumber 2 -MaxProcessors 7 -MaxProcessorNumber 14 -NumaNode 0
    Set-NetAdapterVmq -Name NIC02 -BaseProcessorNumber 18 -MaxProcessors 7 -MaxProcessorNumber 30 -NumaNode 1
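The processor numbers used in this example follow a simple pattern, which the sketch below makes explicit. `Get-VmqProcessorRange` is a hypothetical helper written for illustration and not part of any module. It assumes hyper-threading is enabled (each physical core occupies two consecutive logical processor numbers, of which only the even one is used), one NUMA node per socket, and a single processor group (at most 64 logical processors).

```powershell
# Hypothetical helper (illustration only): derives the VMQ processor settings for one NUMA node.
# Assumes hyper-threading is on, one NUMA node per socket and a single processor group.
function Get-VmqProcessorRange {
    param(
        [Parameter(Mandatory)] [int] $NumaNode,        # 0-based NUMA node index
        [Parameter(Mandatory)] [int] $CoresPerSocket   # physical cores per socket
    )

    $logicalPerNode = $CoresPerSocket * 2              # two logical processors per physical core
    $firstLogical   = $NumaNode * $logicalPerNode      # first logical processor of this node

    [pscustomobject]@{
        NumaNode            = $NumaNode
        BaseProcessorNumber = $firstLogical + 2                     # skip the node's first physical core
        MaxProcessorNumber  = $firstLogical + $logicalPerNode - 2   # last even-numbered logical processor
        MaxProcessors       = $CoresPerSocket - 1                   # all physical cores except the first
    }
}

# Example from the text: 2 sockets with 8 cores each, one NIC per NUMA node.
$nicByNode = @{ 0 = 'NIC01'; 1 = 'NIC02' }
foreach ($node in 0, 1) {
    $r = Get-VmqProcessorRange -NumaNode $node -CoresPerSocket 8
    # Print the command instead of running it, so the values can be reviewed first.
    "Set-NetAdapterVmq -Name $($nicByNode[$node]) -BaseProcessorNumber $($r.BaseProcessorNumber) " +
    "-MaxProcessors $($r.MaxProcessors) -MaxProcessorNumber $($r.MaxProcessorNumber) -NumaNode $($r.NumaNode)"
}
```

Calling the helper with `-CoresPerSocket 14` gives the values for a server with 56 logical cores. The commands are only printed, not executed, because the right values depend on the actual core layout of the host.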

VMQ settings example (optimal, with 56 logical cores in total)

We have a server with the following hardware:

- 2 CPUs (i.e. 2 sockets) with 14 cores each (+ hyper-threading enabled = 28 logical cores per NUMA node)

- 2 NICs (in a team/bond)

The optimal distribution of the queues would be as follows:

Blue marks the physical cores.

Hyper-threaded cores must not be used!

We assign the first NIC to the first NUMA node (NUMA node 0), but we leave out core 0, i.e. the first core in NUMA node 0.

We assign the second NIC to the second NUMA node (NUMA node 1), but we leave out core 0 of that node, i.e. the first core in NUMA node 1.

Applying the VMQ settings

    Set-NetAdapterVmq -Name NIC01 -Enabled $true
    Set-NetAdapterVmq -Name NIC02 -Enabled $true
    Set-NetAdapterVmq -Name NIC01 -BaseProcessorNumber 2 -MaxProcessors 13 -MaxProcessorNumber 26 -NumaNode 0
    Set-NetAdapterVmq -Name NIC02 -BaseProcessorNumber 30 -MaxProcessors 13 -MaxProcessorNumber 54 -NumaNode 1

Further information:

RSS: https://docs.microsoft.com/en-us/windows-hardware/drivers/network/introduction-to-receive-side-scaling

VMQ best practice: https://blogs.technet.microsoft.com/networking/2016/01/04/virtual-machine-queue-vmq-cpu-assignment-tips-and-tricks/

Optimizing Network: http://www.darrylvanderpeijl.com/windows-server-2016-networking-optimizing-network-settings/

SAP: https://blogs.msdn.microsoft.com/saponsqlserver/2012/01/11/network-settings-network-teaming-receive-side-scaling-rss-unbalanced-cpu-load/